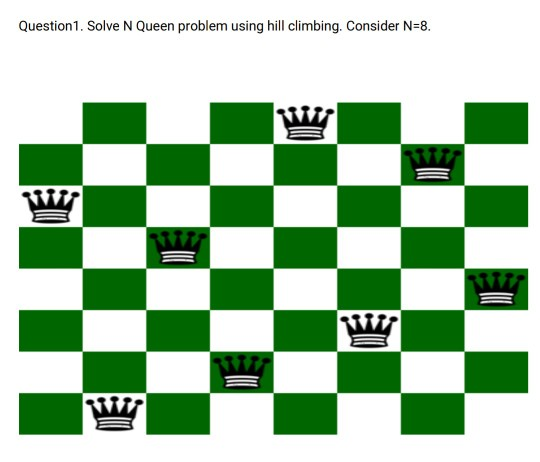

If you implement simple hill climbing algorithm you will reach the local maximum and the algorithm terminates. Suppose you are at the point shown by the current state. The example present in the image is taken from the book, Artificial Intelligence: A Modern Approach. Stochastic hill climbing can actually perform better in many cases. Random-restart hill climbing: Works on the philosophy of "If you don't succeed, try, try again". *Considered good if state has many successors (like thousands, or millions). The probability of selection can vary with the steepness of the uphill move.Two well-known methods are:įirst-choice hill climbing: generates successors randomly until one is generated that is better than the current state. Stochastic hill climbing, a variant of hill-climbing, chooses a random from among the uphill moves. The loop terminates when it reaches a peak and no neighbour has a higher value. Hill-climbing is a search algorithm simply runs a loop and continuously moves in the direction of increasing value-that is, uphill.

Block world problem using hill climbing Offline#

On the other hand, if it is an offline learning task with the entire available data in hand, then performance is the main constraint, and the stochastic approaches are advisable. If it is part of some online learning system that is operating on a batch of data, then there is a strong time constraint, but weak performance constraint (next batches of data will correct for erroneous bias introduced by first batch of data). As you might have suspected, the answer is always: it depends on your algorithm's priorities. A stochastic hill climbing approach, while slower, is more robust to these issues, and the optimization routine has a higher likelihood of reaching the global peak in comparison to the steepest hill climbing algorithm.Įpilogue: This is a good question which raises a persistent question when designing a solution or choosing between various algorithms: the performance-computational cost trade-off. Real world problems deal with noisy and missing data. This would repeat until its cooled down enough or a certain preset number of iterations have completed. In comparison, a simulated annealing algorithm would actually jump away from a global peak, return back and jump away again. It can also use early stopping if a global peak is found. Obviously, for a simple problem with only one peak, the steepest hill climbing is always better. Improvements like Simulated Annealing ameliorate this issue by allowing the algorithm to move away from a local peak, and thereby increasing the likelihood that it will find the global peak. In such cases, when this algorithm starts at a random solution, the likelihood of it reaching one of the local peaks, instead of the global peak, is high. However, real world problems are typically of the non-convex optimization type: there are multiple peaks.

The steepest hill climbing algorithms works well for convex optimization.

0 kommentar(er)

0 kommentar(er)